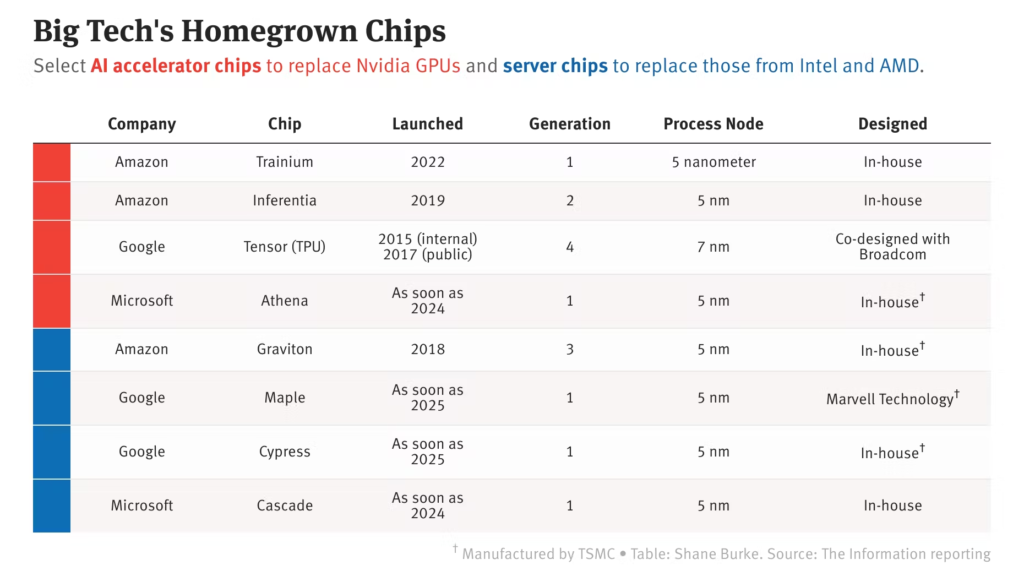

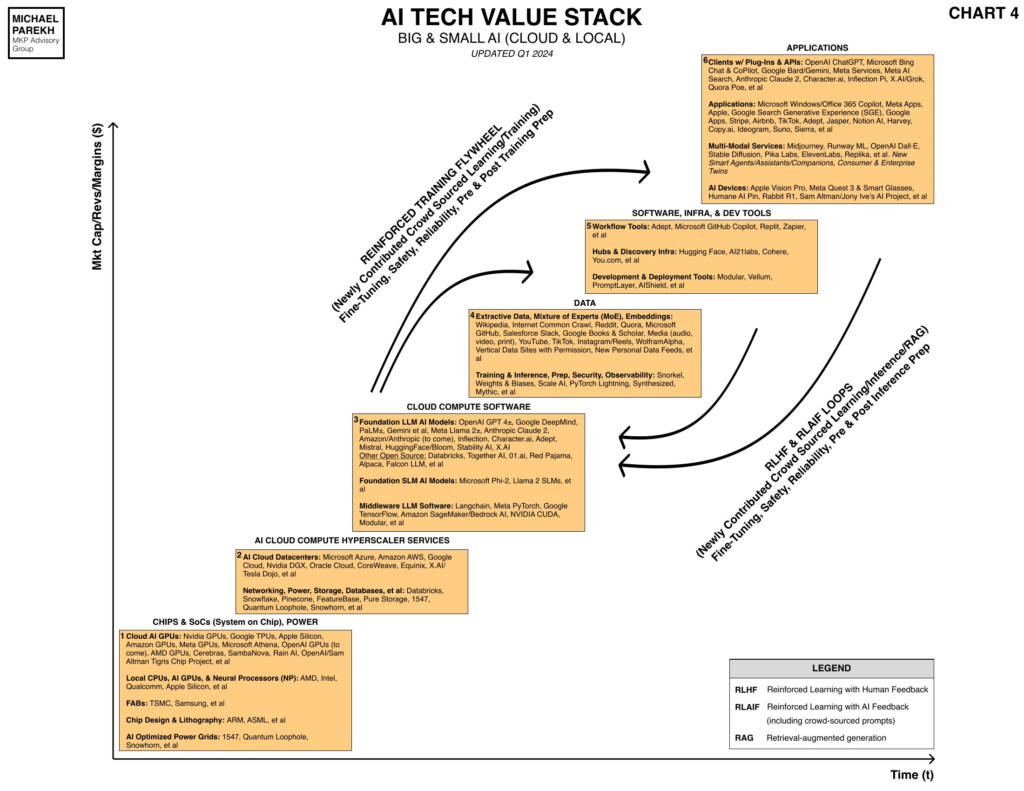

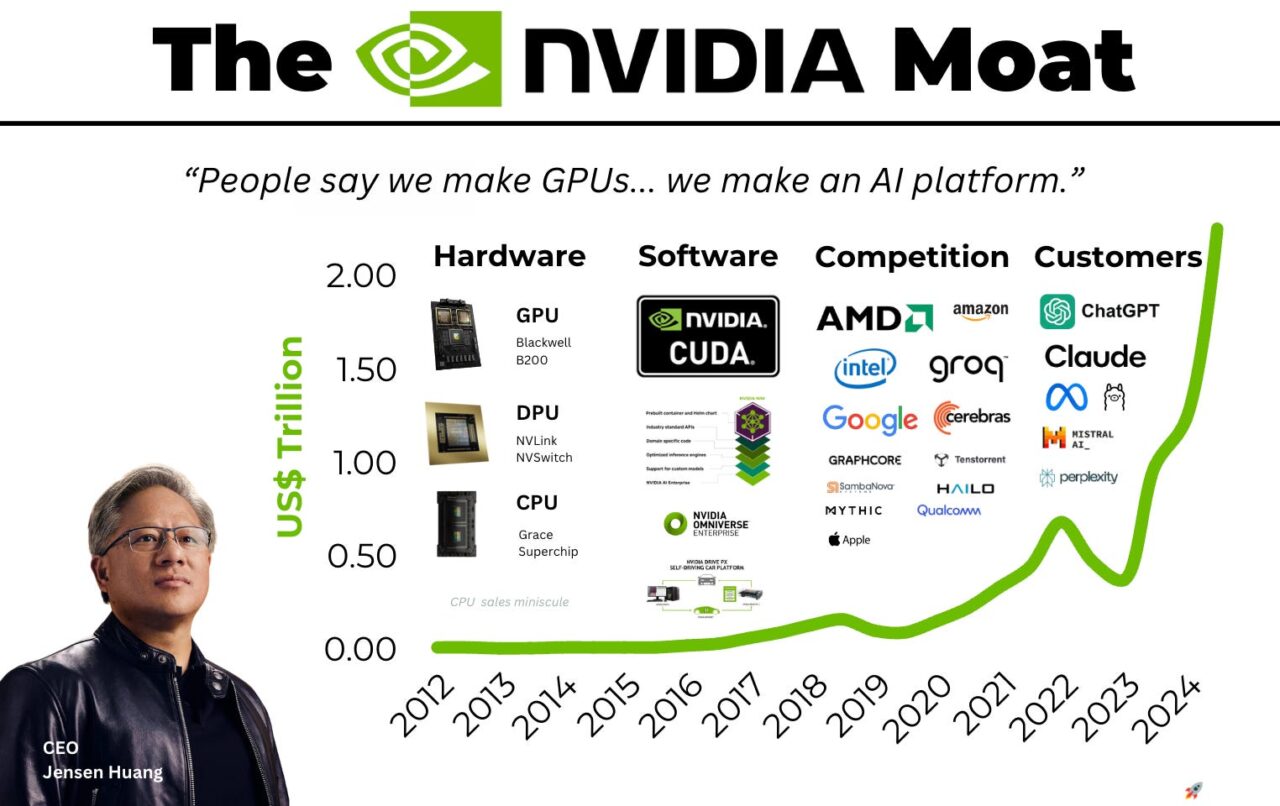

I believe that NVIDIA’s moat is currently quite wide, but it appears to have a significant weak point that clever researchers and well-funded newcomers are beginning to exploit. NVIDIA initially developed its GPUs for workloads that had nothing to do with artificial intelligence, chiefly graphics. That computing power turned out to translate well to deep learning and other AI capabilities, which placed NVIDIA in a particularly strong position as demand for AI began to grow exponentially. The key point, however, is that its extensive infrastructure was built for computing activities that were not specifically designed for AI. This does not mean that NVIDIA is unaware of the issue; it certainly is aware, and it will do everything in its power to make future systems and infrastructure AI-first.

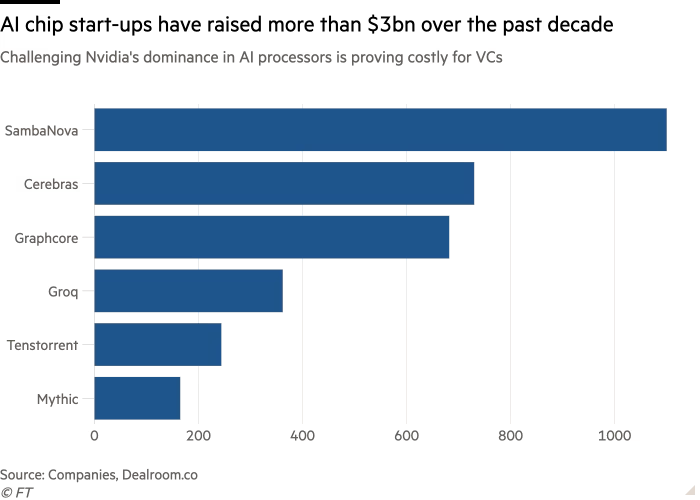

However, there is still a window for new companies to gain market share if they challenge NVIDIA, Google, and other leading tech companies by developing chips designed from scratch for AI workloads. The main competitive advantage of such AI-specific chips would be efficiency on AI workloads, which is a major strength as consumers come to expect faster inference from AI systems. A company much smaller than NVIDIA could plausibly achieve this, but in my opinion it takes the right team, the right funding, and the right ingenuity to bring these projects to fruition and then get them adopted at scale.

Groq and other, less well-known startups are already doing this. In my opinion, and based on my research, Groq seems to have the strongest advantage here and should offer a rather convincing rival to NVIDIA in the AI market in the coming years. Its Tensor Streaming Processor architecture, unlike traditional GPUs and CPUs, takes a deterministic, software-defined approach, which reduces latency and increases efficiency. Moreover, its single-core design allows for high-speed data processing, making it much more attractive for AI computation. Groq’s chips are designed to perform trillions of operations per second and to outperform traditional GPUs on certain AI tasks.

Other major competitors to NVIDIA include Cerebras and SambaNova. Cerebras offers a very powerful single-chip processor, and SambaNova offers an integrated hardware and software system powered by AI-specific chips.

Cerebras has a huge AI chip called the Wafer Scale Engine, which is much larger than traditional chips and takes up almost an entire silicon wafer. As a result, it has exceptional computing power and stands out as a significant competitor. The second-generation version, the Wafer Scale Engine 2, contains 2.6 trillion transistors and 850,000 cores, making it one of the largest chips ever built. Keeping so much compute on a single piece of silicon allows for fast and efficient AI processing while minimizing data movement.

SambaNova’s integrated hardware and software solution, its DataScale system, is powered by chips that use the company’s reconfigurable dataflow architecture. This allows for adaptable and scalable AI processing, and it is interesting because it gives flexibility to companies whose processing requirements vary with the demands of their machine learning workloads at any given time.

We should remember that NVIDIA will not be dethroned from first place in AI infrastructure, given its growing moat. Even so, the companies that target the current gap in NVIDIA’s strategic focus, a gap it now needs to close by readjusting, could do very well. I think the challenge is whether the companies competing with NVIDIA in this way can remain viable once NVIDIA adapts to the technological change and comes back with mass production. I believe that the only option for smaller competitors is to focus strictly on quality. I also believe that their ingenuity and design strength could far outperform NVIDIA’s in AI-specific chips, even for a long time. Even though NVIDIA might be the biggest, it might not be the best.

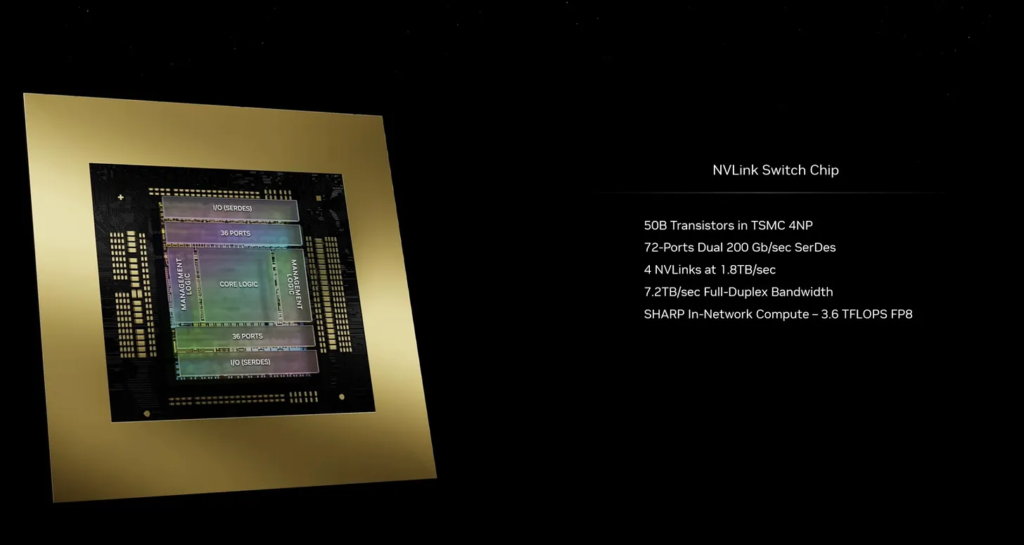

If NVIDIA comes to be seen as the company with the largest computing infrastructure offering but not the most efficient AI-specific chips, at least for a while, this could mean that the stock is currently overvalued. What NVIDIA offers, and what I believe is its most compelling and significant competitive advantage, is a full-stack ecosystem for high-performance computing, including AI, which it continues to develop. This is probably what the market is pricing in through the very high valuation. However, if NVIDIA were suddenly seen as the main provider of that ecosystem but only second best on AI-specific workloads, I think its valuation could undergo a moderate correction.

In light of this risk, I think it is wise for NVIDIA shareholders to be cautious in the short to medium term. My opinion is that NVIDIA’s valuation is not too high given the long-term dependence the world will have on the NVIDIA ecosystem. In the short to medium term, though, the valuation could come to look too optimistic, because some major competitors are emerging that should moderately, but successfully, disrupt the idea of NVIDIA as such a dominant provider of quality in AI workloads. That said, NVIDIA’s continuous AI innovations and integrations could mitigate these risks, especially considering its funding power compared with smaller and newer companies.

I think that Jensen Huang is an exceptional entrepreneur and executive. He has inspired many of my thoughts and much of my work, and it is well documented that he actively tracks and evaluates NVIDIA’s competition daily, fully aware that other companies are trying to take NVIDIA’s position as market leader. This shows quite powerfully what newcomers are up against, and I believe that his work ethic is the foundation of the wide and growing moat that NVIDIA shareholders are getting used to. NVIDIA, without exaggeration, is an exceptional company.

As I mentioned above in my analysis of operations, I believe that NVIDIA’s strength lies in its full-stack ecosystem. In this area, I believe it will be practically impossible for competitors to take a significant share of NVIDIA’s market. This is why I think Mr. Huang has done an excellent job of consolidating NVIDIA’s position as the most advanced (and best-funded) technology company developing AI tools. This is also why I believe that NVIDIA remains a fantastic long-term buy.

I believe that NVIDIA’s CUDA deserves special mention here, as it is the platform that lets a wide range of customers use NVIDIA’s GPUs for general-purpose processing. I think this is an incredibly smart move, as it allows NVIDIA to put the power of its own units to work for multiple purposes through software integration. In effect, CUDA democratizes the power of NVIDIA’s hardware infrastructure. What Groq and other startups might be able to do instead is focus on the niche of AI-specific workloads and design hardware dedicated solely to those tasks. Such hardware should be faster on those tasks than high-powered units that have been adapted to handle variable workloads, AI among them. However, the versatility of general-purpose GPUs offers broader applicability, which is crucial in environments where multiple types of workloads must be managed simultaneously.

NVIDIA also faces competition from AMD (AMD), which has developed its own AI ecosystem called ROCm. However, AMD’s platform is significantly less complete and currently lacks a set of AI-specific features comparable to NVIDIA’s. Instead, AMD’s main competitive focus is to provide high-performance processing from its chip designs at a competitive cost. AMD is still aggressively pursuing improvements in its GPU capabilities and in the ROCm ecosystem to support AI and machine learning workloads. In some respects, if AMD were to focus skillfully on developing AI-specific chips, as Groq does, it could gain a much more significant competitive advantage over NVIDIA. This should not obscure the fact that AMD is, in general, strategically balancing its attention to AI across its total product portfolio. AMD, like NVIDIA, was not initially an AI company.

While NVIDIA is a leader in AI computing infrastructure, it has become evident that there is a temporary opening for innovators to exploit the lag in NVIDIA’s shift toward AI-specific chip design. In the near future, I believe it is possible that NVIDIA will be seen as the largest and best AI development ecosystem, yet find itself, at least for a period, outpaced in chip design by smaller companies focused squarely on the quality of chips and units built specifically for AI. Ultimately, NVIDIA was not initially an AI company; it might take a company dedicated to AI from the beginning to truly deliver the quality that the AI consumer market is about to demand.
